Every fast-growing SaaS platform eventually faces the same reality: scaling a single massive database becomes increasingly risky and expensive.

At Bling (part of the LWSA Group), a leading SaaS ERP platform serving the e-commerce markets in Brazil and Mexico, we hit this ceiling hard. Founded in 2009, Bling powers over 300,000 daily active users and integrates with major players like Amazon, Shopee, and Mercado Livre. With our tables doubling in size annually, simply adding bigger servers was no longer an option. We needed a fundamentally new approach.

This is the story of how we migrated a 25TB mission-critical database to TiDB, and the key lessons we learned along the way.

The Breaking Point

Our traditional architecture had reached its practical limits for our growth trajectory. Our multi-tenant SaaS platform ran on AWS, powered by massive MariaDB/MySQL instances, some with over 190 vCPUs and 1 TB of RAM. Yet even with this infrastructure, we were facing:

- Database size: ~25 TB per instance with tables containing 2–5 billion rows.

- Replication strain: 15 replicas (8 full, 7 partial) managing peaks of 350,000 QPS.

- Operational drag: Routine schema changes on large tables took over 50 hours to complete, even using “online” tools like gh-ost.

We were experiencing the well-known limitations of a monolithic architecture: centralized write contention, architectural bottlenecks, and increasing costs with diminishing performance gains. At that stage, incremental scaling was no longer sufficient to support our growth.

Evaluating Alternatives

Once we decided to move, we established a dedicated team to evaluate the best technologies in the MySQL ecosystem.

Amazon Aurora was our first consideration due to its managed nature. However, it was quickly ruled out because it couldn’t scale writes horizontally, the very bottleneck we needed to solve.

Vitess was a stronger contender. We invested six months exploring it as a sharding layer. While powerful, the “deal-breakers” became evident:

- It required disruptive data modeling changes and significant application rewrites.

- Its reliance on eventual consistency posed unacceptable risks to our business logic.

- There was no safe rollback path to a traditional MySQL setup.

The combination of operational complexity and high migration risk meant that Vitess did not align with our goals.

Discovering TiDB

Our breakthrough came from an unexpected experiment. On a rainy Saturday afternoon, one of our engineers, frustrated with the complexity and limitations of Vitess, decided to dig deeper into alternative solutions for scaling MySQL. Eventually, he came across TiDB, an open-source, distributed SQL database.

He spun up a local cluster, connected our application, and to our surprise, it worked almost immediately without extensive rewrites. This highlighted TiDB’s key advantages:

- MySQL protocol compatibility: A drop-in replacement for the application layer.

- Horizontal scalability: Scaling both reads and writes by adding nodes.

- Strong consistency: ACID compliance across distributed nodes.

- HTAP capabilities: Handling OLTP and OLAP workloads on the same data.

What started as a weekend experiment quickly became a turning point, and our first tests were remarkably promising. TiDB’s compatibility wasn’t just a convenience; it was our migration superpower. It allowed us to adopt a modern, cloud-native platform without rewriting our entire application.

Turning Decision into Action

With the results of our initial tests in hand, the next question was inevitable: Should we move to TiDB? The answer came quickly: Yes!

However, what truly solidified our choice wasn’t just the raw technology, but the ecosystem surrounding it. The comprehensive documentation, rich library of tutorials, and the TiDB.ai assistant significantly accelerated our learning curve. Equally important was the vibrant community and the wealth of case studies from other companies that had solved similar scaling challenges. These factors gave us the confidence that we weren’t just adopting a database, but joining a mature ecosystem.

We translated this decision into a four-phase execution roadmap designed to minimize risk:

1. Prepare & Validate

- Provisioned a self-hosted TiDB cluster.

- Ran a 3 TB POC with 100+ critical queries to verify compatibility (requiring only minor adaptations).

2. Bulk Load & Sync

- Exported the data from MySQL with Dumpling and bulk-imported it with TiDB Lightning.

- Established continuous synchronization using TiDB Data Migration (DM).

3. Read-First Rollout

- Introduced TiDB as a read replica to gradually shift production traffic.

- Leveraged TiDB Operator on Kubernetes for elastic, zero-downtime scaling.

- Decommissioned legacy MySQL replicas as confidence grew.

4. Write Cutover & Hypercare

- Executed the full write-traffic cutover with a verified rollback plan.

- Entered a two-month hypercare period for intensive monitoring, tuning, and stability assurance.
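The compatibility check in phase 1 comes down to running each critical query against both databases and comparing the results. Below is a minimal sketch of the comparison step. It assumes the result sets have already been fetched as lists of tuples by whatever MySQL driver you use, and `compare_result_sets` is a hypothetical helper, not part of any TiDB tool. The unordered mode compares rows as a multiset, because a query without ORDER BY may legitimately return rows in a different order on TiDB.

```python
from collections import Counter
from typing import Any, Sequence, Tuple

Row = Tuple[Any, ...]


def compare_result_sets(
    mysql_rows: Sequence[Row],
    tidb_rows: Sequence[Row],
    ordered: bool = False,
) -> Tuple[bool, str]:
    """Compare one query's results from MySQL and TiDB (hypothetical POC helper).

    ordered=False: compare as multisets (duplicates count, order ignored),
    suitable for queries without ORDER BY.
    ordered=True: rows must match position by position.
    """
    if ordered:
        for i, (a, b) in enumerate(zip(mysql_rows, tidb_rows)):
            if a != b:
                return False, f"row {i} differs: {a!r} != {b!r}"
        if len(mysql_rows) != len(tidb_rows):
            return False, f"row count differs: {len(mysql_rows)} != {len(tidb_rows)}"
        return True, "identical"

    # Counter subtraction keeps only rows that are missing (or over-counted) on one side.
    only_mysql = Counter(mysql_rows) - Counter(tidb_rows)
    only_tidb = Counter(tidb_rows) - Counter(mysql_rows)
    if only_mysql or only_tidb:
        return False, f"only in MySQL: {dict(only_mysql)}, only in TiDB: {dict(only_tidb)}"
    return True, "same rows (order ignored)"


if __name__ == "__main__":
    mysql = [(1, "acme"), (2, "globex"), (3, "initech")]
    tidb = [(3, "initech"), (1, "acme"), (2, "globex")]
    print(compare_result_sets(mysql, tidb))                # passes: same rows, different order
    print(compare_result_sets(mysql, tidb, ordered=True))  # flags the order difference
```

Running the ordered mode only for queries that actually contain ORDER BY keeps the check strict where order is part of the contract and tolerant where it is not.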

Scaling Redefined

The migration marked a fundamental shift from a “scale-up” ceiling to a “scale-out” distributed model.

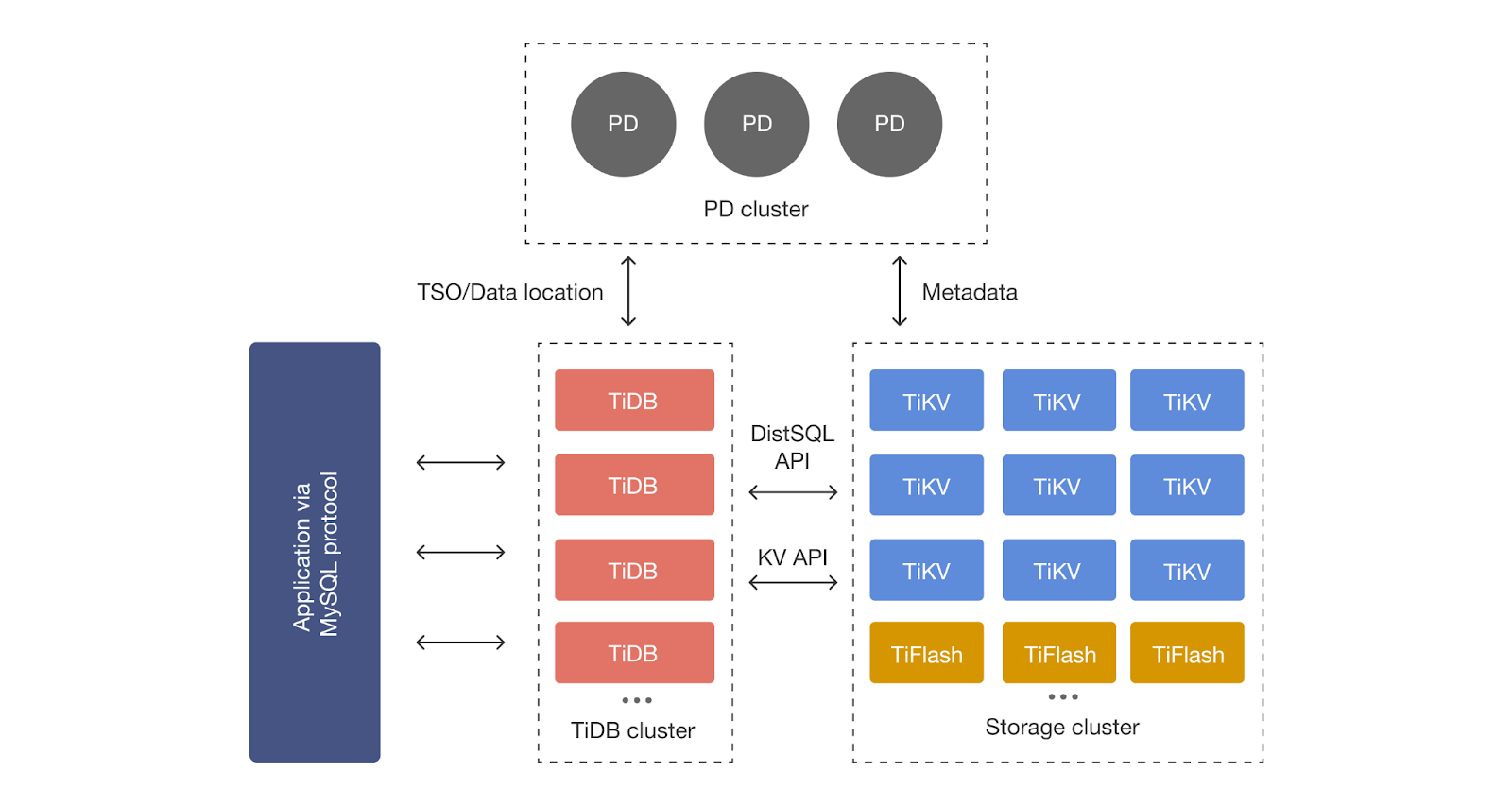

Our legacy setup consisted of a few massive instances. The new TiDB topology, by contrast, is composed of many smaller, distributed nodes, which reflects one of TiDB’s core strengths: the separation of compute and storage.

- The TiDB layer acts as a stateless SQL processing engine, responsible for parsing, optimizing, and executing queries.

- TiKV provides the distributed key-value storage layer, handling replication, fault tolerance, and transactional consistency across nodes.

- Placement Driver (PD) acts as the cluster’s control plane, maintaining global metadata, handling leader election, and orchestrating data placement. It continuously monitors the cluster to balance regions and leaders across TiKV nodes, ensuring high availability, fault tolerance, and efficient resource utilization.
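To make PD’s balancing role more concrete, here is a toy model of region balancing: keep moving one region from the most-loaded TiKV store to the least-loaded one until their region counts are within a tolerance. This is a simplified illustration only. It assumes load is measured purely by region count, whereas PD’s real schedulers also weigh region size, leader counts, store capacity, and more, and `balance_regions` is a hypothetical name.

```python
from typing import Dict, List, Tuple


def balance_regions(
    stores: Dict[str, List[int]], tolerance: int = 1
) -> List[Tuple[int, str, str]]:
    """Greedy toy balancer (not PD's algorithm): fullest store -> emptiest store.

    `stores` maps a store name to the region IDs it holds and is modified
    in place. Returns the moves performed as (region_id, src, dst).
    """
    if tolerance < 1:
        # With tolerance 0 and an uneven total, a region would bounce back and forth forever.
        raise ValueError("tolerance must be at least 1")
    moves: List[Tuple[int, str, str]] = []
    while True:
        src = max(stores, key=lambda s: len(stores[s]))
        dst = min(stores, key=lambda s: len(stores[s]))
        if len(stores[src]) - len(stores[dst]) <= tolerance:
            return moves
        region = stores[src].pop()
        stores[dst].append(region)
        moves.append((region, src, dst))


if __name__ == "__main__":
    cluster = {
        "tikv-1": list(range(0, 9)),  # overloaded store holding 9 regions
        "tikv-2": [9, 10],
        "tikv-3": [11],               # e.g. a freshly added node
    }
    for region, src, dst in balance_regions(cluster):
        print(f"move region {region}: {src} -> {dst}")
    print({s: len(r) for s, r in cluster.items()})
```

Adding a node to the cluster in this model simply means adding an empty store; the next balancing pass fills it, which mirrors at a very high level why scale-out in TiDB does not require manual resharding.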

We deployed TiDB on Kubernetes using TiDB Operator, running the TiDB, TiKV, and PD components separately so that compute and storage could scale independently. A parallel Dumpling + Lightning pipeline imported 25 TB of data into TiDB in 12 hours, and compression brought it down to ~16 TB. DM then kept MySQL and TiDB in sync.
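The bulk-load figures above imply an average import throughput and a compression ratio. A quick back-of-the-envelope check follows. It assumes decimal units (1 TB = 10^12 bytes) and treats 16 TB as the size of one copy of the data after compression; the text does not say how replicas were counted.

```python
TB = 10**12  # decimal terabyte (assumption about the units used in the text)

source_bytes = 25 * TB  # data exported from MySQL
load_hours = 12         # Dumpling + Lightning wall-clock time
stored_bytes = 16 * TB  # approximate size in TiDB after compression

throughput_mb_s = source_bytes / (load_hours * 3600) / 10**6
compression_ratio = source_bytes / stored_bytes
space_saved_pct = (1 - stored_bytes / source_bytes) * 100

print(f"average import throughput: {throughput_mb_s:.0f} MB/s")
print(f"compression ratio: {compression_ratio:.2f}x")
print(f"space saved: {space_saved_pct:.0f}%")
```

That works out to roughly 580 MB/s sustained for 12 hours and about a 36% reduction in size.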

Production Tuning & The Final Cutover

Introducing TiDB into production required a shift in mindset regarding optimization.

Key Technical Adjustments:

- Storage I/O: Whereas MySQL served much of its workload from in-memory buffers, TiDB relies more heavily on disk reads, so we tuned our storage volumes for higher throughput and IOPS.

- Resource groups: Solved the “noisy neighbor” problem by prioritizing critical workloads.

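- Compatibility tweaks: Applied the AUTO_ID_CACHE=1 table option so that auto-increment IDs stay sequential across TiDB instances, and added explicit ORDER BY clauses wherever the application depended on row order, since results gathered from multiple nodes have no guaranteed order without one.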
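Both compatibility tweaks stem from TiDB being spread across several nodes. The toy model below is an illustration, not TiDB’s actual code. The first half shows that when each TiDB instance reserves its own batch of auto-increment IDs (the per-instance caching that, as we understand it, AUTO_ID_CACHE=1 turns off in favor of a single shared allocator), IDs remain unique but no longer follow insertion order across instances. The second half shows that when rows come back from several regions, their order depends on which region answers first unless the query sorts them. The batch size, node names, and the `CachedAllocator`, `CentralAllocator`, `insert_ids`, and `gather` names are all hypothetical.

```python
import random
from itertools import count
from typing import Dict, Iterator, List, Tuple


class CachedAllocator:
    """Toy model: each SQL node reserves its own batch of IDs and hands them out locally."""

    def __init__(self, batch: int) -> None:
        self._batch = batch
        self._next_start = 1  # next unreserved ID in the shared ID space
        self._current: Dict[str, Iterator[int]] = {}

    def _reserve(self, node: str) -> None:
        start = self._next_start
        self._next_start += self._batch
        self._current[node] = iter(range(start, start + self._batch))

    def next_id(self, node: str) -> int:
        if node not in self._current:
            self._reserve(node)
        value = next(self._current[node], None)
        if value is None:  # batch exhausted: reserve a fresh one
            self._reserve(node)
            value = next(self._current[node])
        return value


class CentralAllocator:
    """Toy model: one counter shared by all nodes (the effect AUTO_ID_CACHE=1 aims for)."""

    def __init__(self) -> None:
        self._counter = count(start=1)

    def next_id(self, node: str) -> int:
        return next(self._counter)  # node is ignored: every node shares the counter


def insert_ids(allocator, nodes: List[str], n: int) -> List[int]:
    """Spread n inserts round-robin across nodes; return IDs in insertion order."""
    return [allocator.next_id(nodes[i % len(nodes)]) for i in range(n)]


def gather(regions: List[List[Tuple[int, str]]], rng: random.Random) -> List[Tuple[int, str]]:
    """Concatenate per-region results in a random 'arrival' order, like a scan without ORDER BY."""
    order = list(range(len(regions)))
    rng.shuffle(order)
    return [row for i in order for row in regions[i]]


if __name__ == "__main__":
    nodes = ["tidb-0", "tidb-1"]
    print("per-node batches:", insert_ids(CachedAllocator(batch=1000), nodes, 4))
    print("shared counter:  ", insert_ids(CentralAllocator(), nodes, 4))

    regions = [[(1, "a"), (2, "b")], [(3, "c")], [(4, "d"), (5, "e")]]
    for seed in range(3):
        rows = gather(regions, random.Random(seed))
        print("no ORDER BY:", [r[0] for r in rows],
              "| ORDER BY id:", [r[0] for r in sorted(rows, key=lambda r: r[0])])
```

With per-node batches, four round-robin inserts come out as 1, 1001, 2, 1002 rather than 1, 2, 3, 4; with the sort applied, the gathered rows come out in the same order regardless of arrival order.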

Observability tools like TiDB Dashboard and Grafana were game-changers. Their real-time view of cluster health, query behavior, and execution details let us spot slow queries immediately and quickly identify bottlenecks and tuning opportunities.

With these optimizations, several queries that previously took tens of minutes on MySQL completed in seconds on TiDB.

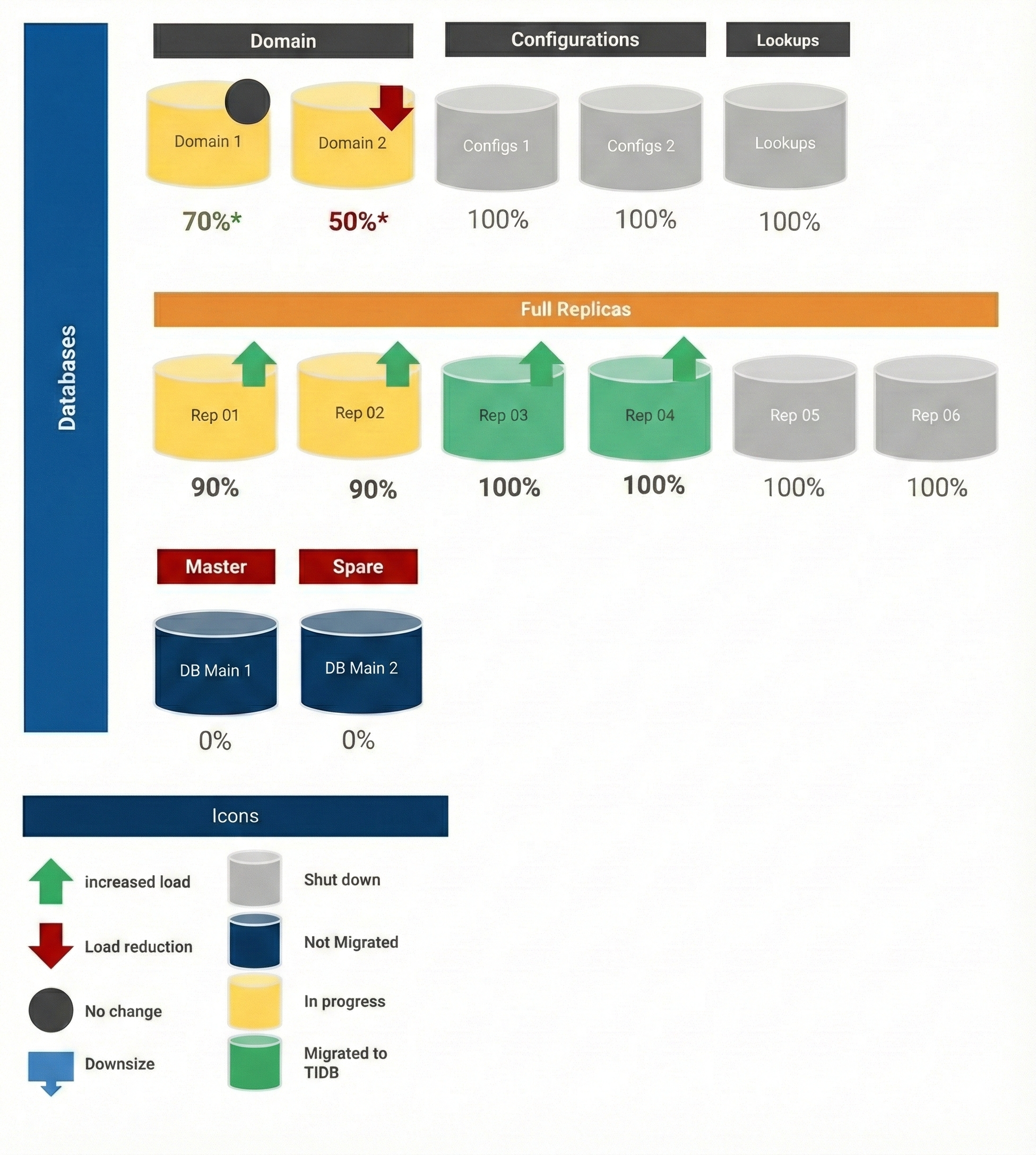

TiDB was initially introduced as a read replica to serve less critical workloads. As confidence grew and more queries were optimized, we progressively shifted more read traffic to TiDB while decommissioning MySQL replicas.
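A common way to implement a gradual read shift like this is a percentage-based router in the application’s data-access layer. The sketch below is hypothetical; the article does not describe how Bling’s routing was built. It hashes a stable key, such as a tenant ID, into one of 100 buckets and sends reads whose bucket falls below the current rollout percentage to TiDB, so each tenant consistently hits the same backend while the percentage is raised step by step. The function names and backend labels are illustrative.

```python
import hashlib


def bucket_for(key: str) -> int:
    """Map a stable key (e.g. a tenant ID) to a bucket in [0, 100)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def read_backend(tenant_id: str, tidb_percent: int) -> str:
    """Choose the backend for a read query during a gradual rollout.

    Writes are not routed here: in this model they keep going to the
    MySQL primary until the write cutover.
    """
    if not 0 <= tidb_percent <= 100:
        raise ValueError("tidb_percent must be between 0 and 100")
    return "tidb" if bucket_for(tenant_id) < tidb_percent else "mysql-replica"


if __name__ == "__main__":
    tenants = [f"tenant-{i}" for i in range(10_000)]
    for pct in (5, 25, 50, 100):
        on_tidb = sum(read_backend(t, pct) == "tidb" for t in tenants)
        print(f"{pct:>3}% rollout -> {on_tidb} of {len(tenants)} tenants read from TiDB")
```

Because a tenant’s bucket never changes, raising the percentage only moves additional tenants to TiDB, and setting it back to 0 acts as an immediate rollback for reads.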

The migration project concluded with a critical cutover operation involving over 50 engineers. During a planned five-hour maintenance window, we successfully switched write traffic to TiDB and reversed the replication flow (making MySQL the downstream backup).

After the switch, we continued to monitor the cluster closely, and it held up well through the “trials by fire” that followed. Most notably, during Black Friday, our highest-volume period of the year, TiDB handled the massive load with stability.

Results: Bling’s Migration by the Numbers

Here’s a quick snapshot of what it took to get our migration over the finish line:

- Duration: 10 months end-to-end execution.

- Scale: 50 TiKV nodes and 15 TiDB instances at peak.

- Effort: Over 300 pull requests and 1,000+ optimized queries.

- Data: 25 TB migrated and compressed into 16 TB.

TiDB significantly improved our operational efficiency. We can now scale nodes and adjust disk configurations without service interruptions or downtime, giving us far greater flexibility in production.

Schema changes saw substantial gains: index creation dropped from hours to minutes, and most ALTER operations now complete in seconds, enabling faster and safer database evolution.

TiDB also proved highly resilient under load, sustaining over 50,000 concurrent connections during peak traffic, more than four times the ~12,000 supported by our previous write database. This removed a recurring source of application instability and strengthened overall platform reliability.

Final Thoughts

Migrating to TiDB didn’t just modernize our infrastructure; it reshaped how we build and operate Bling at scale. By moving from a monolithic database to a distributed architecture with MySQL compatibility at its core, we unlocked predictable growth, faster operations, and a sustainable path forward for our platform.

Throughout this journey, the TiDB community played a critical role, providing guidance, sharing real-world experiences, and collaborating closely with our team. This engagement was so impactful that one of our engineers was recognized as a TiDB Champion for actively contributing across community channels.

Looking ahead, we still have work to do. A small subset of workloads is still being migrated, and we continue refining our approach to fully integrate these use cases into the new architecture. In parallel, we are exploring strategies for automatic compute autoscaling, aiming to adjust SQL-layer capacity dynamically based on real-time workload patterns.

As TiDB continues to evolve, we remain closely aligned with new releases, continuously evaluating enhancements and optimizations that can further strengthen our platform. One development that has particularly caught our attention is TiDB X, the latest version of TiDB that promises a significant leap forward in performance, scalability, and architectural capabilities.

