Founded in 2014 in Singapore, Ninja Van is a leading last-mile logistics provider operating across six countries in Southeast Asia: Singapore, Malaysia, Indonesia, the Philippines, Vietnam, and Thailand — with nationwide coverage in each market.

Ninja Van’s platform handles logistics for top-tier e-commerce players such as Amazon and Lazada. Its services span parcel pickup, delivery, returns, tracking, and customer notifications, all of which depend on a highly distributed, data-intensive technology stack.

At TiDB User Day India, Mani Kuramboyina, Head of Engineering at Ninja Van, discussed how the logistics powerhouse navigated scaling challenges and unlocked the power of hybrid transactional and analytical processing with TiDB. This blog recaps Kuramboyina’s talk from the event.

Phase 1: Growing Pains in a Sharded World

In its early years, Ninja Van ran MySQL with Galera clustering, a popular choice for multi-master replication. It served them well, at least at first.

What started with manual tooling soon grew into a 130TB hybrid architecture serving more than 3.2 million queries per minute for 10,000+ operational users.

But as the business scaled:

- Write-intensive operations started overwhelming Galera nodes.

- Flow control issues under high load led to replication bottlenecks.

- Adding new nodes triggered State Snapshot Transfers (SSTs) — heavy, disruptive operations.

- Schema changes required gh-ost or pt-online-schema-change, often throttled manually.

To cope, Ninja Van introduced ProxySQL to direct traffic intelligently, routing reads and writes with regex-based query rules. But this came at a cost:

- Complex routing logic had to be baked into the app layer.

- Sharding logic became harder to maintain as business needs evolved.

- Operational complexity exploded as teams had to maintain hundreds of MySQL instances with uneven resource utilization.
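The regex-based read/write routing described above can be sketched in a few lines. This is a conceptual model of first-match-wins query rules, not Ninja Van’s actual ProxySQL configuration; the hostgroup IDs and patterns are hypothetical.

```python
import re

# Hypothetical hostgroup IDs; real deployments choose their own.
WRITER_HOSTGROUP = 10
READER_HOSTGROUP = 20

# Ordered rules: the first pattern that matches decides the destination.
QUERY_RULES = [
    # Locking reads must go to the writer, so this rule comes first.
    (re.compile(r"^\s*SELECT\b.*\bFOR\s+UPDATE\b", re.IGNORECASE | re.DOTALL),
     WRITER_HOSTGROUP),
    # Every other SELECT can be served by a read replica.
    (re.compile(r"^\s*SELECT\b", re.IGNORECASE), READER_HOSTGROUP),
]


def route(query: str) -> int:
    """Return the hostgroup for a query; anything unmatched goes to the writer."""
    for pattern, hostgroup in QUERY_RULES:
        if pattern.search(query):
            return hostgroup
    return WRITER_HOSTGROUP


if __name__ == "__main__":
    for q in (
        "SELECT * FROM orders WHERE id = 1",
        "SELECT id FROM orders WHERE id = 1 FOR UPDATE",
        "UPDATE orders SET status = 'done' WHERE id = 1",
        "WITH t AS (SELECT 1) SELECT * FROM t",
    ):
        print(route(q), q)
```

Note that the last query, a read written as a CTE, falls through to the writer because no rule anticipates it. Every such gap needs another pattern, which hints at how rule sets like this grow hard to maintain.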

The cost of scale wasn’t just hardware; it was human effort.

Fig. 1: Mani Kuramboyina, Head of Engineering at Ninja Van, presenting at TiDB User Day India

The Breaking Point: Operational Intelligence Gets “Too Smart”

With millions of deliveries per day, real-time data isn’t a nice-to-have; it’s critical. Ninja Van’s data platform powers everything from shipment tracking and customer notifications to internal analytics, fraud detection, and warehouse optimization.

By 2020, the data team was no longer just supporting services; they were powering an entire operational intelligence ecosystem.

Ops teams, business users, and analysts were crafting complicated SQL queries, often hundreds of lines long, to build dashboards, perform ad hoc analytics, or troubleshoot logistics issues in real time.

These users needed:

- Real-time or near-real-time data

- Support for lookups, aggregates, and wildcard searches

- Access through self-service tools like Redash and Metabase

But MySQL read replicas weren’t built for that kind of workload:

- Queries timed out or failed.

- Replication lag made data stale.

- Scaling required spinning up hundreds of replica nodes, with limited ROI.

- Even auto-scaling groups of 100+ servers couldn’t meet demand.

The architecture became a four-tier maze of replication and proxies: resilient, but unsustainable.

Enter TiDB: Testing the Waters with an Operational Data Store (ODS)

With traditional solutions reaching their limits, Ninja Van began evaluating distributed SQL databases. Their requirements included:

- MySQL compatibility (60,000+ existing queries)

- Horizontal scalability for both reads and writes

- Built-in support for online transactional processing (OLTP) and online analytical processing (OLAP) workloads

- CDC support to feed a growing data lake

- Kubernetes-native operations

- Strong query performance under concurrency

After testing several options, including Vitess, CockroachDB, and AliCloud ADB, the company chose TiDB.

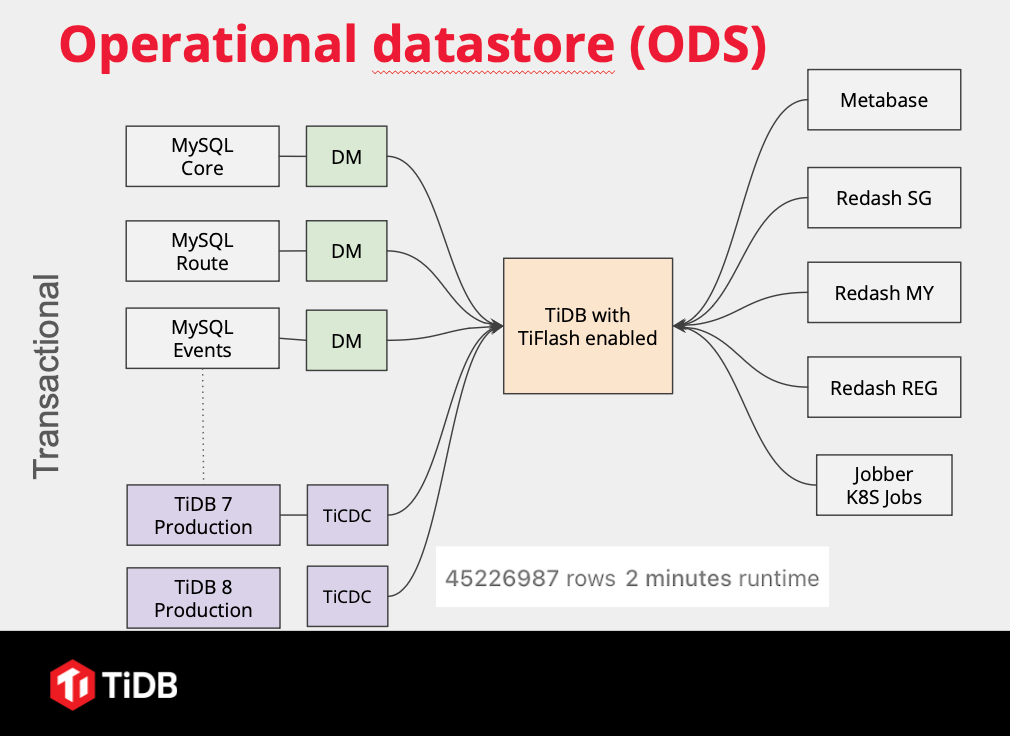

Why start with the Operational Data Store (ODS)?

When Ninja Van began evaluating distributed SQL solutions, they didn’t jump straight into migrating their core systems. Instead, they chose to test the waters with their Operational Data Store (ODS), a key component of their analytics infrastructure that, while important, didn’t have the same latency or uptime requirements as their transactional workloads.

This made the ODS an ideal testing ground for TiDB.

Here’s why the team chose to begin here:

- It was critical, but not as latency-sensitive as customer-facing systems.

- It housed complex analytical queries that could benefit from TiDB’s HTAP (Hybrid Transactional and Analytical Processing) architecture.

- It provided an opportunity to offload heavy read and aggregate workloads from their main MySQL clusters using TiDB’s built-in column store.

Once the decision was made, the Ninja Van team carefully architected their first TiDB deployment with the following components:

- Replication via TiDB Data Migration (DM) to keep the ODS in sync with existing MySQL sources.

- A shared TiDB cluster serving all regions, with resource control in place to prevent noisy neighbor problems.

- Columnar storage nodes to accelerate analytical workloads and reduce pressure on transactional queries.

- Role-based access controls to securely support more than 10,000 Redash users across departments.

By starting with the ODS, Ninja Van was able to validate TiDB’s performance, scalability, and manageability without disrupting mission-critical services.

Fig. 2: Operational Data Store for Ninja Van

The result? Real-time insights at scale. Queries that previously took minutes or failed altogether could now complete in seconds.

Phase 2: Transactional Workloads - The Real Test

Having seen strong early results with their Operational Data Store (ODS), the Ninja Van data team decided it was time to take the next leap: moving transactional workloads to TiDB.

But this was a whole different level of complexity.

Unlike the ODS, transactional systems were at the core of Ninja Van’s logistics platform. These services powered real-time deliveries, parcel tracking, order management, and e-commerce partner integrations. There was zero margin for error, and certainly no tolerance for downtime.

To make matters more challenging:

- Over 180 microservices depended on MySQL backends.

- Many of these services had low-latency requirements with strict SLAs.

- A full migration had to be gradual and non-disruptive — no “big bang” rewrites.

Despite the high stakes, TiDB passed with flying colors.

Why TiDB Worked for Transactional Systems

TiDB brought immediate value where traditional tooling had failed:

- Schema changes were now fast and non-blocking — gone were the days of carefully throttled gh-ost jobs.

- Native MySQL compatibility meant services could migrate one-by-one, with no need to rewrite thousands of queries.

- Built-in Change Data Capture (TiCDC) seamlessly fed downstream consumers like the data lake, Kafka pipelines, and real-time dashboards.

- Backups, restore processes, and TTL-based archival became simpler and safer — no more fragile homegrown scripts.

- And most impressively, query throughput hit 44,000 QPS, proving TiDB could handle even the most demanding transactional workloads.
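As a rough illustration of the TTL-based cleanup mentioned in the list above, the sketch below builds a TiDB table-level TTL statement and models the expiry rule in plain Python. The table name, column name, and 90-day retention are hypothetical, and the source does not describe Ninja Van’s actual retention policy. TiDB removes expired rows with a background job, so deletion is not instantaneous, and any archival copy would have to be taken before rows expire.

```python
from datetime import datetime, timedelta


def ttl_ddl(table: str, column: str, days: int) -> str:
    """Build a TiDB statement that expires rows `days` days after `column`."""
    if days <= 0:
        raise ValueError("days must be positive")
    return f"ALTER TABLE `{table}` TTL = `{column}` + INTERVAL {days} DAY;"


def is_expired(created_at: datetime, days: int, now: datetime) -> bool:
    """Model of the TTL rule: a row is eligible once created_at + interval has passed."""
    return created_at + timedelta(days=days) < now


if __name__ == "__main__":
    # Hypothetical table and retention window.
    print(ttl_ddl("tracking_events", "created_at", 90))
    now = datetime(2024, 6, 1)
    print(is_expired(datetime(2024, 1, 1), 90, now))  # older than 90 days
    print(is_expired(datetime(2024, 5, 1), 90, now))  # still within retention
```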

Where they previously relied on manual operations, ad hoc scripts, and a patchwork of tools, TiDB delivered a unified, production-ready platform with out-of-the-box tooling and enterprise-level resilience.

“It’s no longer a question of whether TiDB can scale — it’s a question of what else we can do with it.”

Mani Kuramboyina, Head of Engineering (India), Ninja Van

Developer & Platform Experience: A Paradigm Shift

The impact wasn’t just on data performance. TiDB reshaped the engineering workflow behind the scenes.

Before, infrastructure was a fragmented landscape of shell scripts, Ansible playbooks, and cloud console clickops. With TiDB, the team consolidated everything into a clean, repeatable, Infrastructure-as-Code approach.

- Deployments are now fully automated using Terraform, Terragrunt, and Kubernetes manifests.

- CI/CD pipelines manage versioning, updates, and cluster provisioning across environments.

- All TiDB upgrades are first tested on the ODS, ensuring stability before they’re promoted to production workloads.

- Monitoring and observability saw a dramatic upgrade, with built-in integration to Grafana, Prometheus, and alerting stacks.

But perhaps the most astonishing part?

The entire TiDB infrastructure, which spans more than 130TB of data and serves millions of queries per minute, is operated by a platform team of just two engineers.

That level of simplicity and operational leverage is rare at this scale and a testament to the maturity of both TiDB and the team behind it.

The Road Ahead

Ninja Van’s journey with TiDB is far from over. The team is actively planning for their next phase and the focus areas include:

- Migrating to ARM-based clusters for better performance-per-cost — early tests show promising savings compared to traditional chipsets.

- Improved migration tooling, enabling faster, safer transition for the remaining MySQL workloads still in production.

- Advanced resource control strategies to fine-tune performance across business units, regions, and internal analytics teams.

- Scaling TiDB to 200TB and beyond, with data volumes growing by 200GB every single day.
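For a sense of the timeline behind the last item, here is a back-of-the-envelope calculation. It assumes the 130TB figure quoted earlier is the current size, that growth stays constant at 200GB per day, and that 1TB = 1000GB. None of these assumptions is confirmed by the talk.

```python
import math


def days_until(target_tb: float, current_tb: float, growth_gb_per_day: float) -> int:
    """Days of linear growth needed to reach target_tb (decimal units, 1 TB = 1000 GB)."""
    if growth_gb_per_day <= 0:
        raise ValueError("growth_gb_per_day must be positive")
    remaining_gb = max(target_tb - current_tb, 0) * 1000
    return math.ceil(remaining_gb / growth_gb_per_day)


if __name__ == "__main__":
    print(days_until(200, 130, 200))  # prints 350
```

Under those assumptions the cluster would reach 200TB in roughly 350 days, a little under a year.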

Watch the complete session replay from TiDB User Day India 2025 and hear how Ninja Van scaled operational intelligence and transactional workloads with TiDB. Mani Kuramboyina, Head of Engineering, shares their journey, lessons, and what’s next.

Ready to simplify scale like Ninja Van?

Start a free TiDB Cloud trial and see how distributed SQL handles high-throughput transactions, real-time analytics, and zero-downtime operations — without sharding pain or operational sprawl.

Experience modern data infrastructure firsthand.
